Diagnostic Assessment of BIT and Sensors

Built-in-Test (BIT) is broadly used as a method to assist Design for Test (DFT) activities for digital designs during production. It may also provide a means to facilitate operational “testing” for the proper functioning of specific portions of a design prior to, or during operation.

Typically, BIT is designed to satisfy a requirement at the lower levels of the design, usually levied upon a specific design, subassembly or CCA. This lower-level requirement can be verified through a quality checkout of that design piece: exercising as many of its functions as possible and validating the output of those functions within the context of that design piece. This is not too challenging to perform for any independent design, which is merely one piece of the integrated design hierarchy.

What is not always available to the independent designer, or to the design partnering activities, is how best to use BIT to report on how any function or failure manifests in the additional “integrated” designs at the next higher levels of the operational asset. Without a mechanism to track all functional or failure propagations, in any particular state of operation, throughout complex integrated systems designs, optimizing BIT placement is reduced to educated guesswork.
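As a rough illustration of what "tracking failure propagation" means, the sketch below walks a small dependency graph to find which BITs would observe a given failure. The item names, the `FEEDS` graph and the `BIT_AT` placement are all hypothetical, and this is not how eXpress represents a model; it only shows the kind of reasoning that is unavailable when each design piece is analyzed alone.

```python
from collections import deque

# Hypothetical integrated design: each item feeds the items listed for it.
FEEDS = {
    "power_supply": ["cpu_card", "sensor_card"],
    "sensor_card": ["cpu_card"],
    "cpu_card": ["display"],
    "display": [],
}

# Hypothetical BIT placement: the item each BIT observes.
BIT_AT = {"bit_cpu": "cpu_card", "bit_display": "display"}


def affected_items(failed_item, feeds):
    """Return the failed item plus every item downstream of it."""
    seen = {failed_item}
    queue = deque([failed_item])
    while queue:
        item = queue.popleft()
        for nxt in feeds.get(item, []):
            if nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return seen


def bits_that_fire(failed_item, feeds, bit_at):
    """Return the sorted names of BITs whose observed item is affected."""
    hit = affected_items(failed_item, feeds)
    return sorted(name for name, item in bit_at.items() if item in hit)
```

In this toy graph a `power_supply` failure and a `sensor_card` failure trip the same two BITs, so neither BIT alone can tell them apart. That is the sort of finding that only appears once propagation is traced across design pieces.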

BIT (test point) location has often been selected by designers based upon the desired utility of the BIT for the acceptance testing of their specific design. Unless otherwise required by the systems integrator, the designer is typically not accountable for meeting logistical requirements at the operational asset level. This is primarily because each independent design within the larger system may have independent logistical support requirements, if any. If a more integrated design approach is required at the integrated systems level, then determining the best use of BIT would require additional interdisciplinary expertise, collaboration, tools and resources.

Furthermore, assessing and optimizing the BIT within a single design only establishes the inherent diagnostic capability of that specific design. The same assessment should also occur at the “integrated” levels of the design hierarchy, so that the analysis considers all design levels together. From this point, the BIT may be refined or optimized to report (“test”) where diagnostic utility could potentially be achieved. In eXpress this is a simple assessment, and it removes the educated guesswork.

As this diagnostic detail becomes readily available, the design team and the systems integrator are able to objectively assess the “effectiveness” and “sustainment value” of the BIT, not simply for the design piece at hand, but for the inclusion of that design piece into a much larger integrated system.

Higher levels of the diagnostic design will typically utilize the same lower level BIT to form more comprehensive assessments at the higher levels of the system architecture. This becomes tricky because the lower level BIT is spec’d to validate a certain percentage of the functions on their respective design pieces and the next higher levels of the design(s) may not be able to determine which functions are not able to be fully assessed during design development.

How do we really “know” what our BIT is testing?

The short answer is that, without knowledge of the Test Coverage of all of the BIT throughout each design piece within the design, we lack sufficient evidence to prove which functions are being tested and which are not being thoroughly tested at higher levels of the “integrated” design.
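To make the idea of "knowing what the BIT is testing" concrete, here is a minimal sketch that splits a list of functions into those exercised by at least one BIT and those exercised by none. The function names and the `bit_coverage` mapping are hypothetical inputs; in practice this mapping is exactly the knowledge that is usually missing at the integrated level.

```python
def coverage_report(functions, bit_coverage):
    """Return (tested, untested, fraction_tested) for the given functions.

    functions:    list of function names in the integrated design
    bit_coverage: dict mapping a BIT name to the functions it exercises
    """
    all_functions = set(functions)
    tested = set()
    for covered in bit_coverage.values():
        tested |= set(covered)
    tested &= all_functions  # ignore coverage claims for unknown functions
    untested = all_functions - tested
    fraction = len(tested) / len(all_functions) if all_functions else 0.0
    return sorted(tested), sorted(untested), fraction


# Hypothetical example data
functions = ["f1", "f2", "f3", "f4"]
bit_coverage = {"bit_a": ["f1", "f2"], "bit_b": ["f2"]}
tested, untested, fraction = coverage_report(functions, bit_coverage)
```

Note that two BITs covering the same function (`f2` here) do not raise the coverage figure, which is one reason per-piece coverage percentages cannot simply be added up at higher levels.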

Even the most advanced and sophisticated Computer-Aided Design (CAD) tools used today lack, in many instances, the ability to determine the test coverage of the BIT at higher levels of the design. Multiple design pieces, designed by multiple design teams using independent design tools and differing levels of preference and expertise, typically begin to cloud the BIT test coverage as the design pieces are integrated. As the collective design pieces spill over into areas where design domains (electronic, mechanical, hydraulic, optic, etc.) begin to cross paths, the BIT test coverage becomes increasingly suspect. This is just the beginning of the slide into the No Fault Found (NFF) and Can Not Duplicate (CND) quicksand.

Design for Test (DfT) and Design for Testability (DFT):

These are two related but separate endeavors. The Design for Test (DfT) and Design for Testability (DFT) processes and objectives are very often confused among experts in the two separate design discipline(s).

Design for Test is typically performed solely for electronic design components (chips, circuit boards, sets of circuit boards) at the lowest levels of design. As a result, Design for Test is inadvertently performed at the expense of the broader vision of test effectiveness or value for the fielded product (Integrated Systems’ Level). Design for Testability, by contrast, can reuse the investment in Design for Test to validate the test coverage effectiveness at the higher and highest levels of the fielded design.

Evaluating the Test Coverage of the BIT

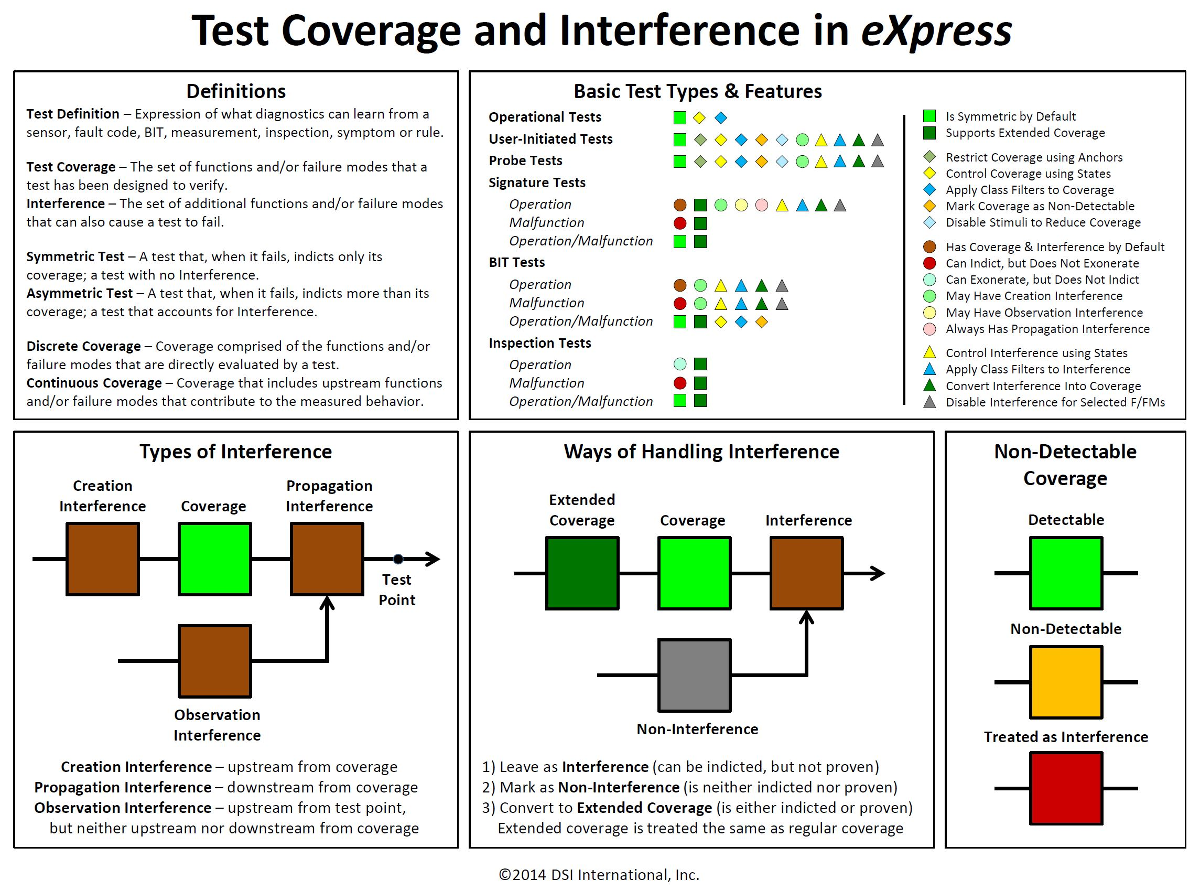

The test coverage is very effectively captured and fully represented in the eXpress modeling paradigm. The BIT test coverage for any BIT at any level may have one of three (3) types of Test Coverage Interference, that is, some “actors” that may obstruct the precision of the failure(s) being observed by the sensors or reported by the BIT.

“Interference” with test coverage at the higher levels of the full integrated systems design may not be known early enough during the design development lifecycle, nor by the design team or individual at the lowest levels of the design.

Test Coverage Uncertainty in RCM Approaches to Maintenance

Reliability-Centered Maintenance, or “RCM”, which is often referred to as “Scheduled Maintenance”, is not typically concerned with the knowledge of “Test Coverage” during design development. When “on-condition” maintenance inspections occur as an augmentation of RCM, the approach inadvertently implies a limited use of “Test Coverage” in the sustainment paradigm. That said, RCM is defined during design development and relies upon a separate discipline (Maintenance Engineering) to determine the extent (scope and frequency) of the application of “on-condition” maintenance inspections.

Without Diagnostics Engineering playing an integral role with RCM, “Test Coverage” is dismissed and RCM essentially becomes a contributor to the deploying of a segregated and independent maintenance philosophy.

RCM contributing to NFF’s, CND’s and RTOK’s

Even advanced approaches that use “augmented” RCM techniques, such as “on-condition” maintenance methods that add Condition-Based Maintenance (CBM) to the existing RCM approach, lack the certainty of “Test Coverage”, which may negatively impact Fault Isolation certainty.

Fault Isolation Effectiveness is essentially dismissed and is not typically a concern of RCM during design development. This practice has traditionally been a major contributor to such symptoms of ineffective sustainment as “No Fault Found” (NFF), “Can Not Duplicate” (CND), “Re-Test OK” (RTOK) and a host of other cost drivers resulting from inaccurate or unnecessary replacement activities.

RCM is designed around a requirement to schedule replacements of components before a failure is detected or before a critical failure may result from combined non-critical failures. The discipline requires (per MIL-STD-1629A) the identification of the lowest level failure modes for any specified “component(s)” within that design and the resulting “failure effects” for each level of the design architecture.

Although the FMECA provides a method to describe the detection method(s) for observing any (impending or “hard”) failures, there is no requirement for the Reliability Engineer to note any restrictions on that detection method that may arise once the design is integrated with other designs into a hierarchical systems design. The assumption is that the required FMECA product covers an independent level of the design and that, once delivered, it is accurate for its independent piece of the systems design.

Types of Test Coverage and Interference

The charts below describe the three (3) types of test coverage interference and how each is easily managed in eXpress:

Diagnostic Validation

Traditionally, designs have been developed with little attention to discovering the design’s diagnostic integrity. Engineers are overwhelmed with many tasks while Program Management hasn’t really understood the widespread value of diagnostic engineering.

Designing for Test (DFT), Health Management (PHM, ISHM, etc.) or multiple sustainment levels is a more complex task today. It ultimately embodies a more integrated systems’ understanding of the “Test Coverage” sophistication when considering the incorporation of many designs across a complex hierarchical design architecture.

Likewise, when Designing for Test on medium to complex CCU’s, traditional DFT or any independent Test Coverage analysis fails to provide diagnostic conclusions, whether at any single level or in consideration of the fully fielded product application. Once the design is integrated with other designs and fielded, any test results obtained from on-board BIT, or at any continued secondary level (Depot or ground maintenance), will depend on the diagnostic integrity of the “integrated systems’” design, regardless of the “Test Coverage” specs described in the static DFT analysis.

This is where we experience and rely on the “Diagnostic Integrity” of our design(s). The diagnostic integrity depends on the mix of design domains involved, its complexity, and the ability to evolve as the operational environment or implementation changes. Fortunately, DSI’s Diagnostic Validation capability, using the Diagnostic Fault Insertion (DFI) feature in eXpress, has no difficulty in considering all of these complexities to exhaustively determine the diagnostic effectiveness of any complex or large-scale (integrated systems’) design(s).

Validating the BIT Test Coverage and Creating/Assigning Fault Groups to the BIT

After the BIT is fully validated (using the advanced eXpress Diagnostic Validation, or Diagnostic Fault Insertion capability), the integrated designs will be capable of performing to the diagnostic precision at any level(s) of the diagnostic design hierarchy as validated in eXpress.

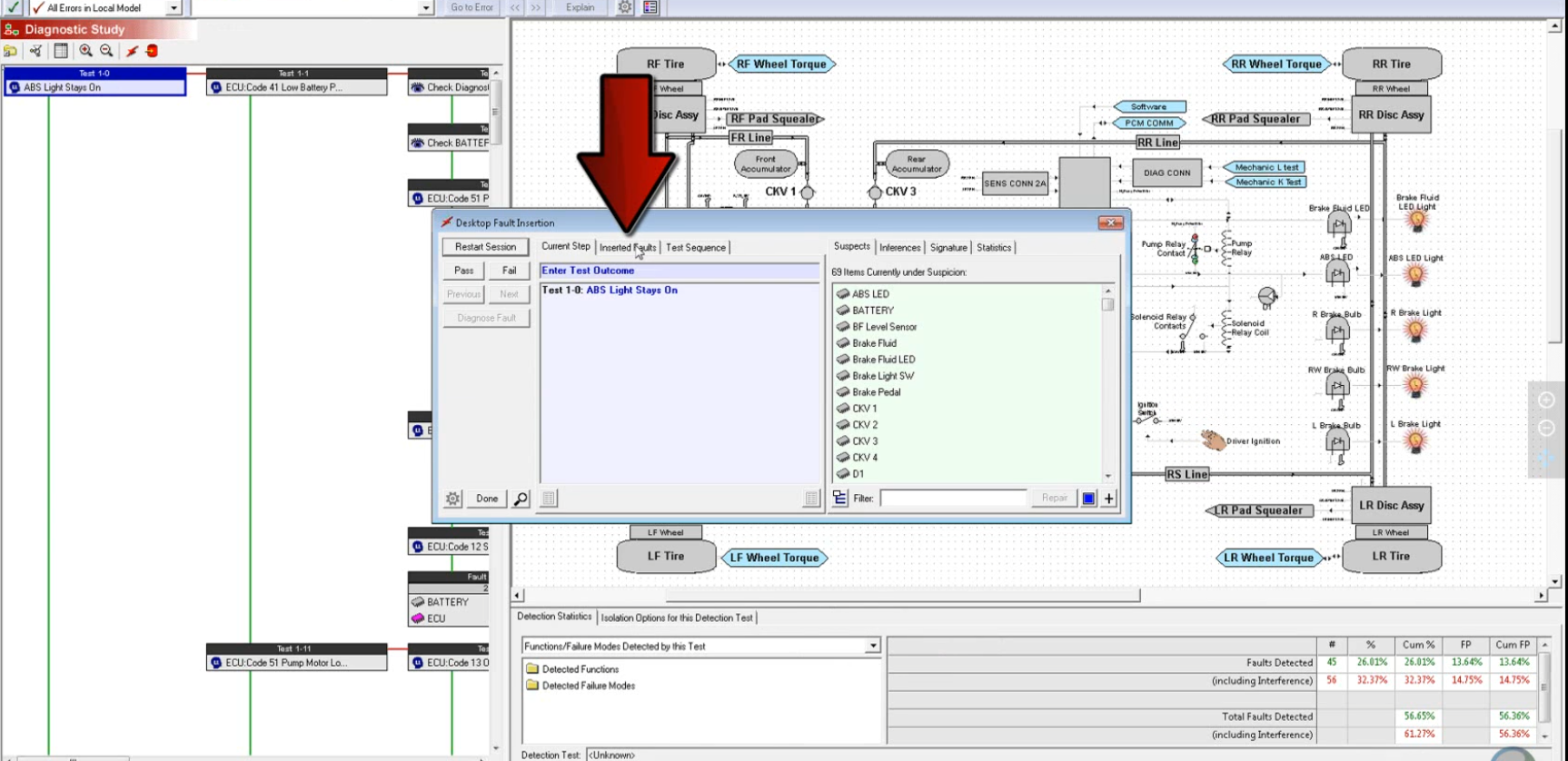

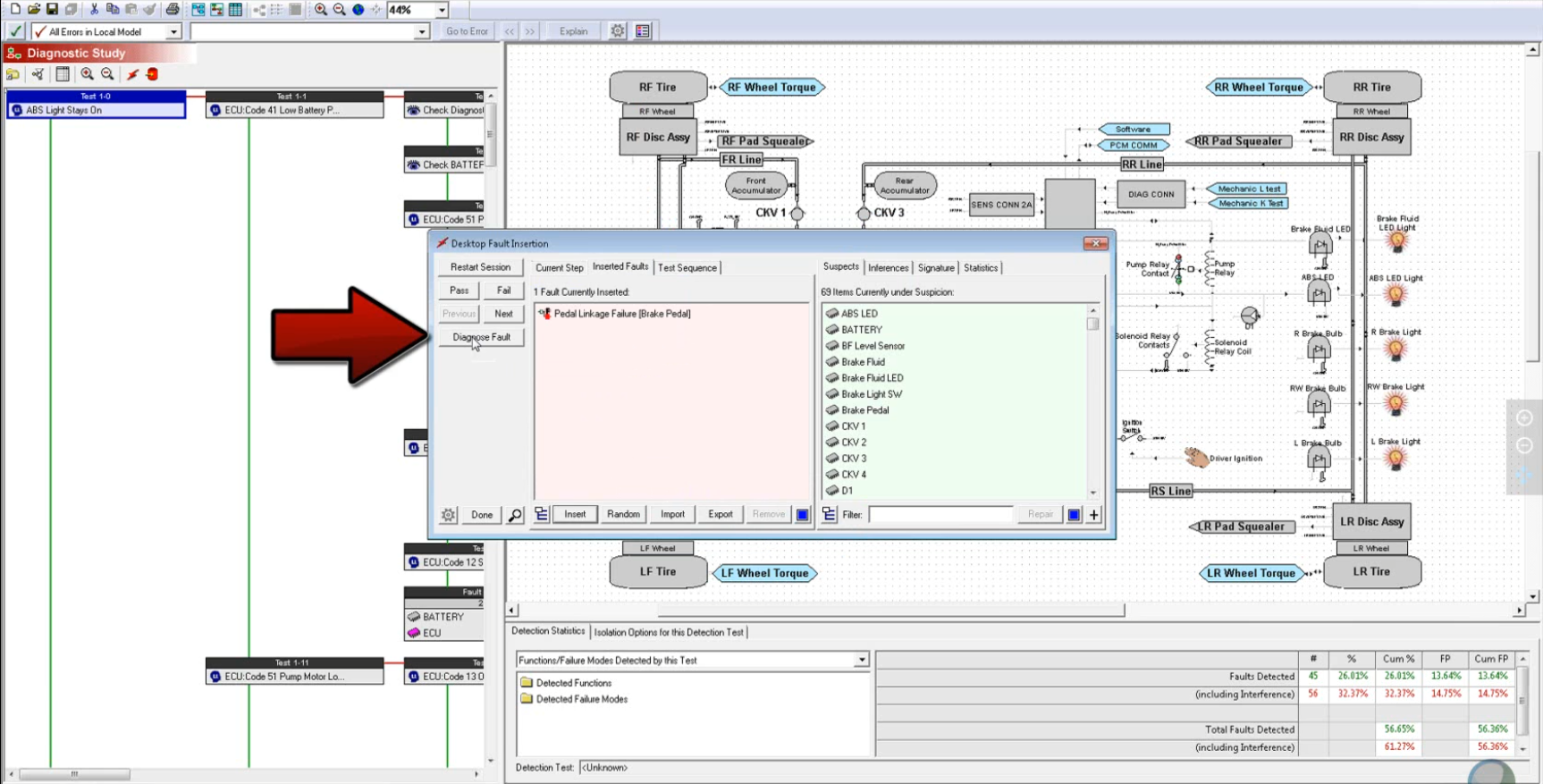

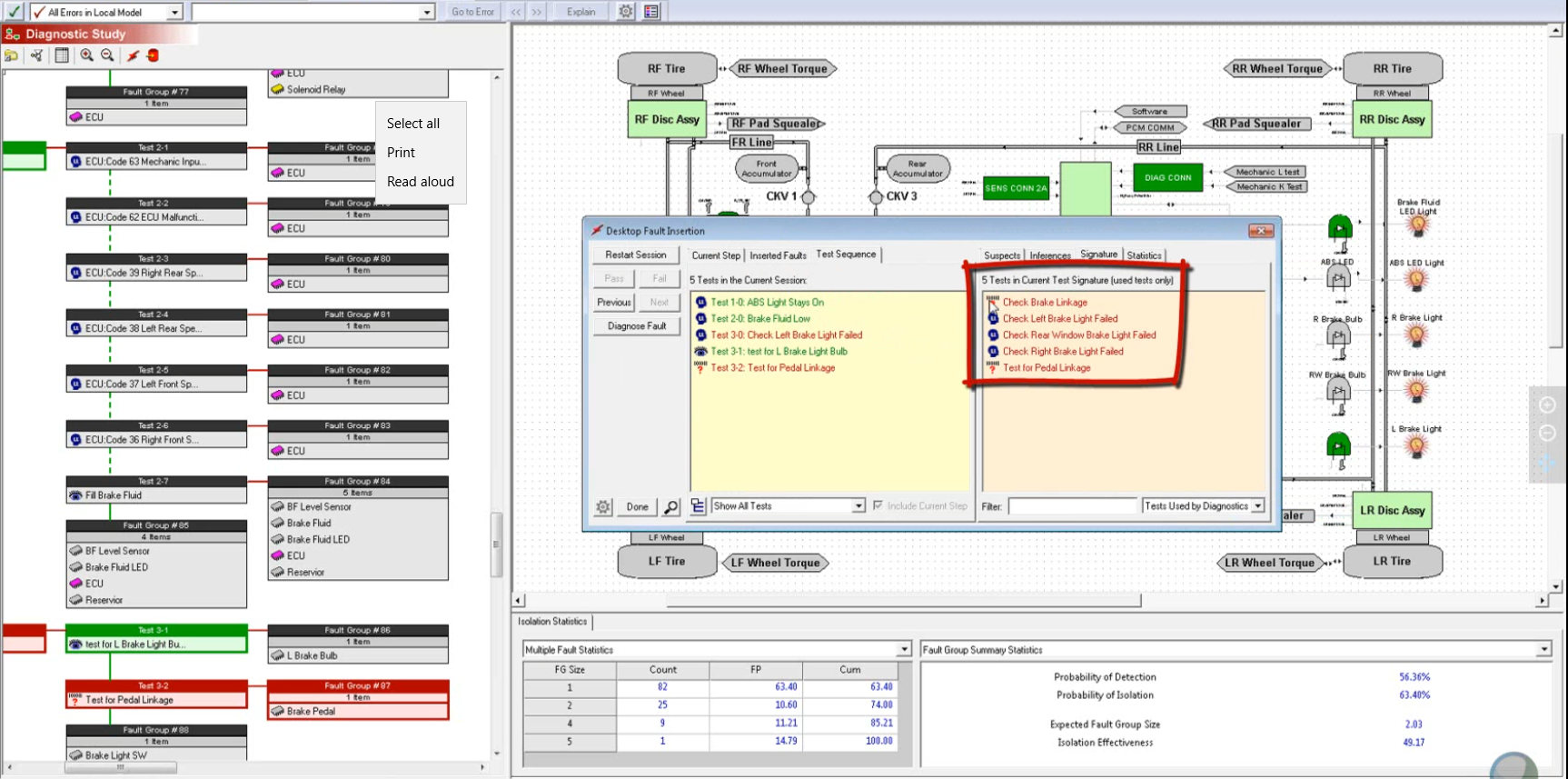

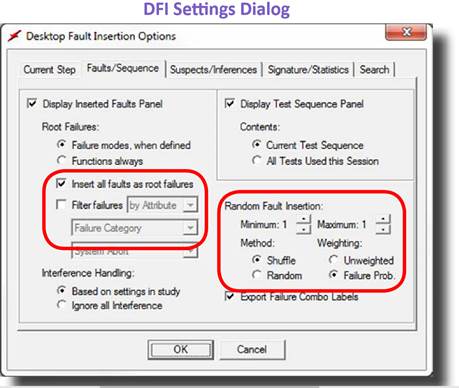

The following five (5) images show a sneak peek at the robust eXpress “DFI” capability:

Step 1: Insert any fault(s):

Step 2: Diagnose Fault(s):

Step 3: Discover Test Signature to arrive at Inserted Fault(s):

In the images above, the eXpress DFI feature allows the user to fully trace the entire path and/or diagnostic inferences produced across all hierarchical levels of the diagnostic design architecture. This is how the full impact of the Test Coverage is observed both from a diagnostic sequencing perspective (diagnostic tree on the left) and the comprehensive visual perspective using the design window on the right.
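The three steps above (insert, diagnose, discover the test signature) can be sketched in a few lines outside of any tool. This is only an illustration under stated assumptions: the `SIGNATURES` table is hypothetical, only single faults are considered, and diagnosis is a simple exact match on the set of failing tests rather than the diagnostic tree that eXpress produces.

```python
# Hypothetical table: each fault mapped to the set of tests that would fail.
SIGNATURES = {
    "R1_open": {"t1", "t2"},
    "U3_stuck": {"t2"},
    "C7_short": {"t1", "t2"},
    "J2_loose": set(),  # no test fails: this fault is not detected
}


def insert_fault(fault, signatures):
    """Step 1: insert a fault and return the test signature it produces."""
    return frozenset(signatures[fault])


def diagnose(observed, signatures):
    """Step 2: return every fault whose signature equals the observed one."""
    return sorted(f for f, sig in signatures.items() if frozenset(sig) == observed)


def validate(signatures):
    """Step 3: insert each fault in turn and record what diagnosis arrives at."""
    return {f: diagnose(insert_fault(f, signatures), signatures) for f in signatures}


def undetected(signatures):
    """Return faults that no test observes (empty signature)."""
    return sorted(f for f, sig in signatures.items() if not sig)


results = validate(SIGNATURES)
```

Here `R1_open` and `C7_short` land in the same group because they share a signature, while `J2_loose` is flagged as undetected. Both outcomes are the kind of coverage gap that fault insertion is meant to expose before fielding.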

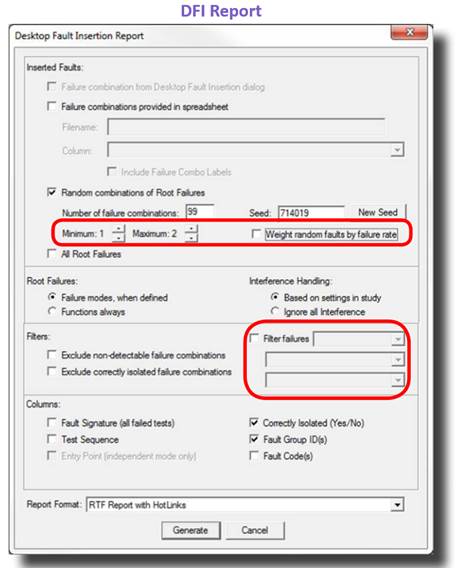

The next two (2) images describe some of the scope and depth of the Fault Insertion capability:

This eXpress DFI Diagnostic Validation capability allows the filtering of inserted faults by severity (so that only failure modes that propagate to an end effect of a certain minimum severity are inserted) or by attribute (so that only failure modes with a given attribute value are inserted). This feature, which affects both explicit fault selection and randomly generated faults, allows you to focus diagnostic validation efforts on more critical failures, or on failures of a certain type.
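The two filters described above amount to simple predicates over a list of failure modes. The sketch below is a hypothetical stand-in, not the eXpress data model: the `FailureMode` fields are invented for illustration, and it assumes that a higher number means a more severe end effect, which may be the reverse of the convention on a given program.

```python
from dataclasses import dataclass, field


@dataclass
class FailureMode:
    name: str
    end_effect_severity: int  # assumption: higher number = more severe
    attributes: dict = field(default_factory=dict)


def filter_by_severity(modes, minimum):
    """Keep failure modes whose end effect meets the minimum severity."""
    return [m for m in modes if m.end_effect_severity >= minimum]


def filter_by_attribute(modes, key, value):
    """Keep failure modes whose attribute `key` equals `value`."""
    return [m for m in modes if m.attributes.get(key) == value]


# Hypothetical failure modes
modes = [
    FailureMode("pump_seizure", 4, {"domain": "hydraulic"}),
    FailureMode("led_dim", 1, {"domain": "electronic"}),
    FailureMode("adc_drift", 3, {"domain": "electronic"}),
]
critical = filter_by_severity(modes, 3)
electronic = filter_by_attribute(modes, "domain", "electronic")
```

Because both filters return plain lists, they can be chained, and the same filtered list can feed either an explicit selection or a random draw.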

By default, random fault insertion works in “shuffle” mode, which prevents faults from being inserted more than once until all other faults have been inserted.
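One plausible way to get this "shuffle" behavior is to draw faults in repeated random passes over the whole list, so that nothing repeats within a pass. The generator below is a sketch of that idea with hypothetical names; how eXpress implements it internally is not stated here.

```python
import random


def shuffle_mode(faults, rng=None):
    """Yield faults in random order, one full pass at a time.

    Within a pass every fault appears exactly once, so no fault is
    inserted a second time until all other faults have been inserted.
    The generator never ends unless `faults` is empty.
    """
    if rng is None:
        rng = random.Random()
    pool = list(faults)
    while pool:
        rng.shuffle(pool)  # new random order for this pass
        yield from pool


# Hypothetical fault list; a seeded generator makes a session repeatable.
faults = ["R1_open", "U3_stuck", "C7_short"]
stream = shuffle_mode(faults, random.Random(0))
first_pass = [next(stream) for _ in range(len(faults))]
second_pass = [next(stream) for _ in range(len(faults))]
```

Passing a seeded `random.Random` is a design choice worth considering when a validation session needs to be reproduced later.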

The image above shows that the user can generate reports, export them to a spreadsheet, and then reimport from the spreadsheet to seed future diagnostic sessions configured for a demo or for any other purpose.
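The export and reimport round trip can be pictured with an ordinary CSV file standing in for the spreadsheet. The column names below (`fault`, `detected`, `isolated_to`) are hypothetical and are not the actual DFI report layout; the sketch only shows how a report written once can seed the fault list of a later session.

```python
import csv
import io

FIELDS = ["fault", "detected", "isolated_to"]  # hypothetical report columns


def export_report(rows, stream):
    """Write one row per inserted fault to a CSV stream."""
    writer = csv.DictWriter(stream, fieldnames=FIELDS)
    writer.writeheader()
    writer.writerows(rows)


def import_seed_faults(stream):
    """Read a previously exported report and return its fault names in order."""
    return [row["fault"] for row in csv.DictReader(stream)]


# Hypothetical session results
rows = [
    {"fault": "R1_open", "detected": "yes", "isolated_to": "R1_open;C7_short"},
    {"fault": "J2_loose", "detected": "no", "isolated_to": ""},
]
buffer = io.StringIO(newline="")
export_report(rows, buffer)
buffer.seek(0)
seed = import_seed_faults(buffer)
```

An in-memory `io.StringIO` is used so the example runs without touching the file system; a real file opened with `newline=""` would work the same way.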

The DFI Reports offer a seamless and comprehensive mechanism that will track and report on any details for any inserted faults. This is a unique and interactive capability that can only be found within eXpress and DSI’s ISDD.

While there are countless uses for (see image below), and benefits from, the ability to validate the diagnostic quality of any design or collection of integrated designs, the most valuable impact can be gained during design development.

Fault Code Assignment, Integration & Management

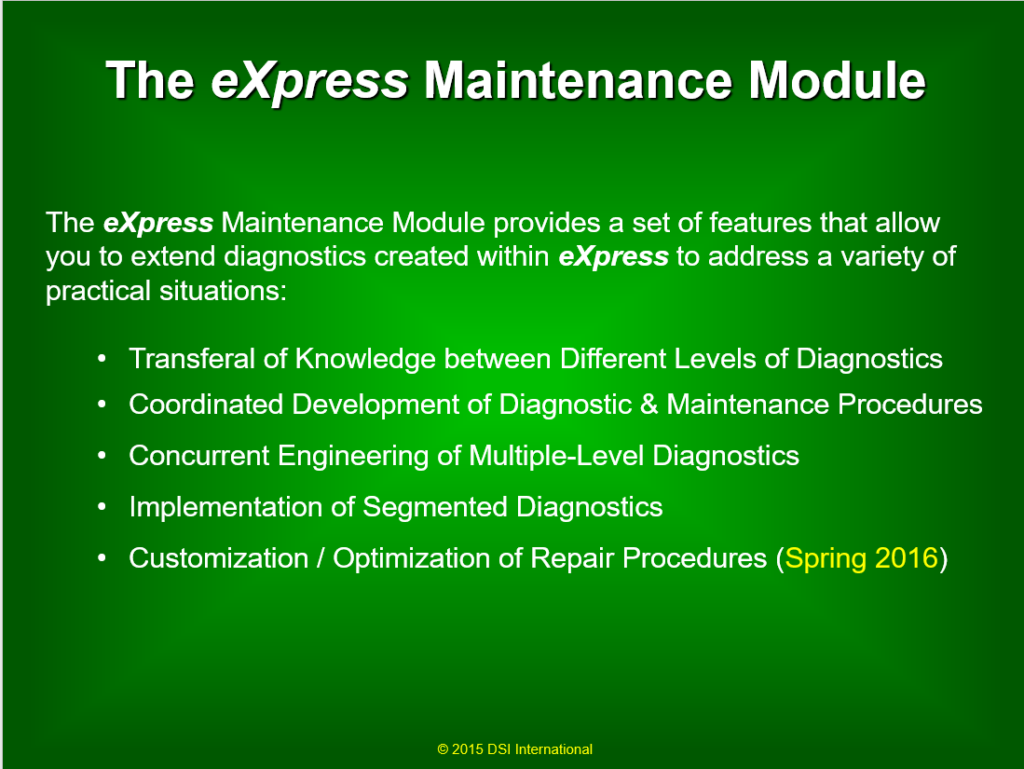

Within the eXpress and Integrated Systems Diagnostic Design (ISDD) environment, assigning and managing the Fault Codes for the BIT throughout the design development of the Integrated Vehicle Health Management (IVHM) is a rather simple and error-free integrated process. To use this capability for any IVHM or Integrated System Health Management (ISHM) implementation, the ancillary eXpress Maintenance Module is required. The approach described below highlights some of the procedural steps to prepare the eXpress model for this additional purpose and benefit.

Maintenance Module Interoperability

The eXpress Maintenance Module is an advanced capability that leverages the vetted BIT test coverage described within eXpress and prepares it for use in operational and sustainment environment(s). As the demand in industry continues to expand for this technology, the eXpress Maintenance Module will continue to evolve to accommodate a myriad of advanced run-time diagnostic requirements.

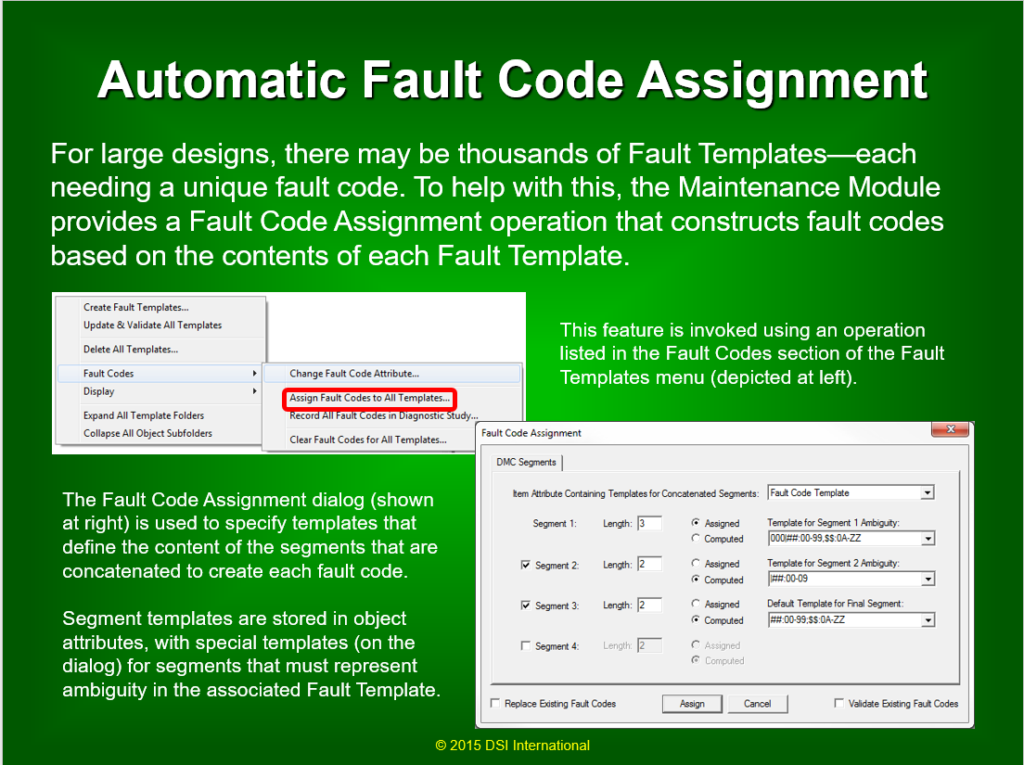

Auto-Assign the Fault Codes:

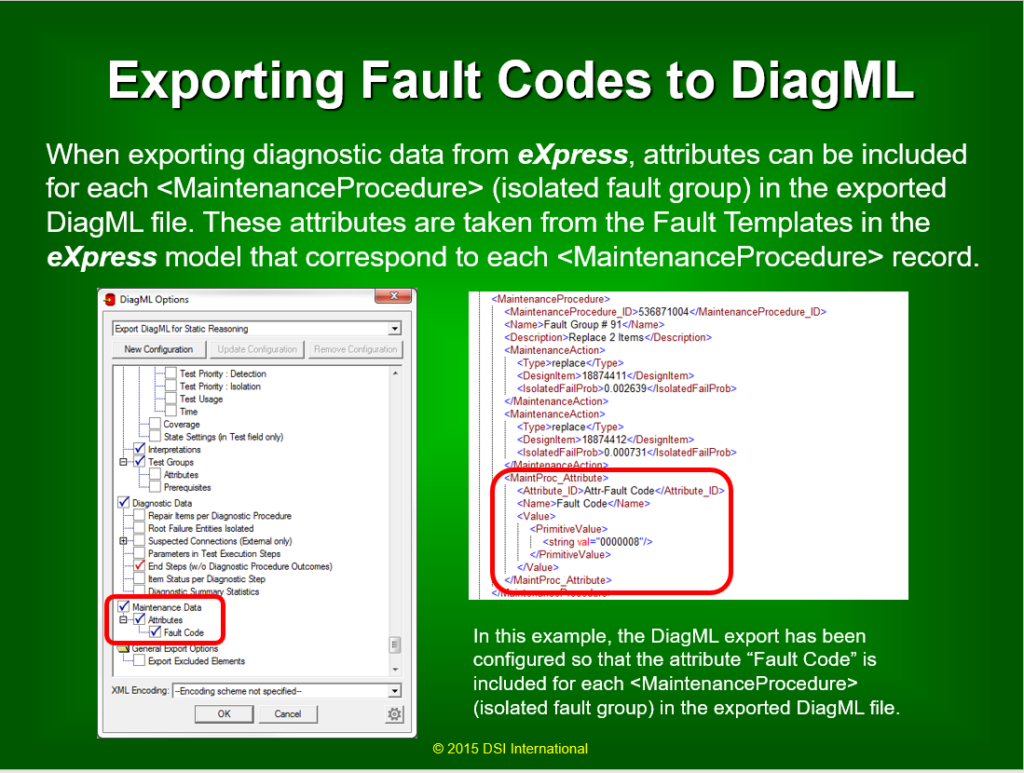

Once the BIT has been fully validated throughout the diagnostic design hierarchy, the Fault Codes can be “auto-assigned” to each Fault Group, or to whatever sustainment paradigm is deemed appropriate for the project. Refer to the two (2) images below, which show the Fault Codes being assigned and then exported to the sustainment paradigm.
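As a rough picture of what "auto-assigning" might involve, the sketch below groups faults that share an identical test signature and gives each group a sequential code. Both parts are assumptions made for illustration: treating a Fault Group as "faults the BIT cannot tell apart" is one reading of the term, and the `FC0001` code format is invented.

```python
def group_faults(signatures):
    """Group faults that produce an identical set of failing tests."""
    groups = {}
    for fault, sig in signatures.items():
        groups.setdefault(frozenset(sig), []).append(fault)
    return [sorted(members) for members in groups.values()]


def auto_assign_codes(groups, prefix="FC", start=1):
    """Assign sequential codes to fault groups in a stable, sorted order."""
    ordered = sorted(groups)
    return {f"{prefix}{start + i:04d}": members for i, members in enumerate(ordered)}


# Hypothetical validated signatures
signatures = {
    "R1_open": {"t1", "t2"},
    "C7_short": {"t1", "t2"},
    "U3_stuck": {"t2"},
}
codes = auto_assign_codes(group_faults(signatures))
```

Sorting the groups before numbering keeps the codes stable from run to run, which matters once the codes have been exported to a maintenance environment.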

Transferal of Diagnostic or BIT Test Coverage Knowledge to the Operational Environment

At this point, we simply transfer this knowledge to the field. This can be performed for an array of implementations as described in the following image.

Related Articles and Presentations:

Optimized Sensor Placement Strategies in eXpress to Reduce False Alarms

Boundary Scan in eXpress

The eXpress Maintenance Module

Test Types in eXpress

Related Videos:

BIT to Guided Troubleshooting

Diagnostic Validation Through Fault Insertion

COTS-Based Solution for Through-Life Support