
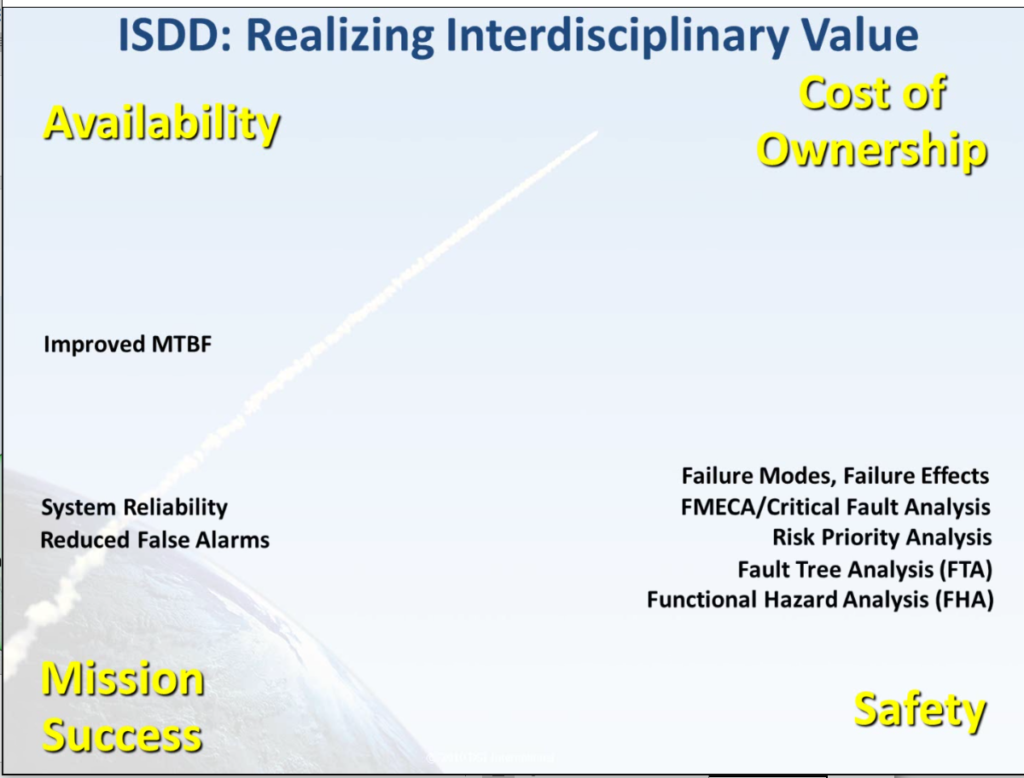

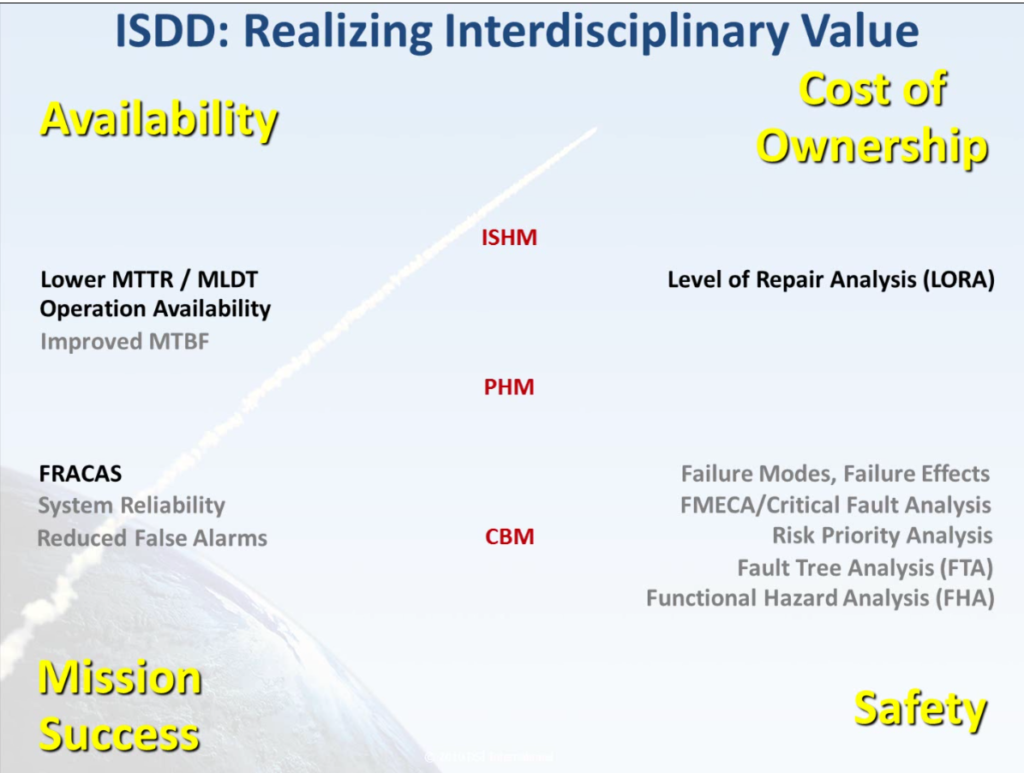

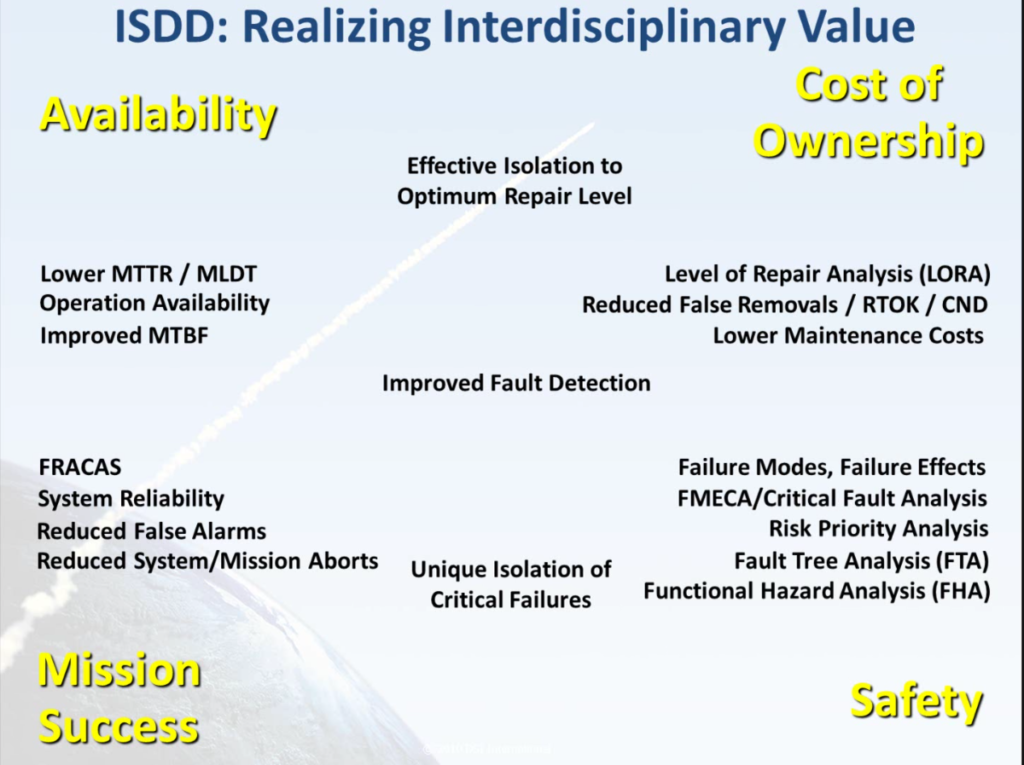

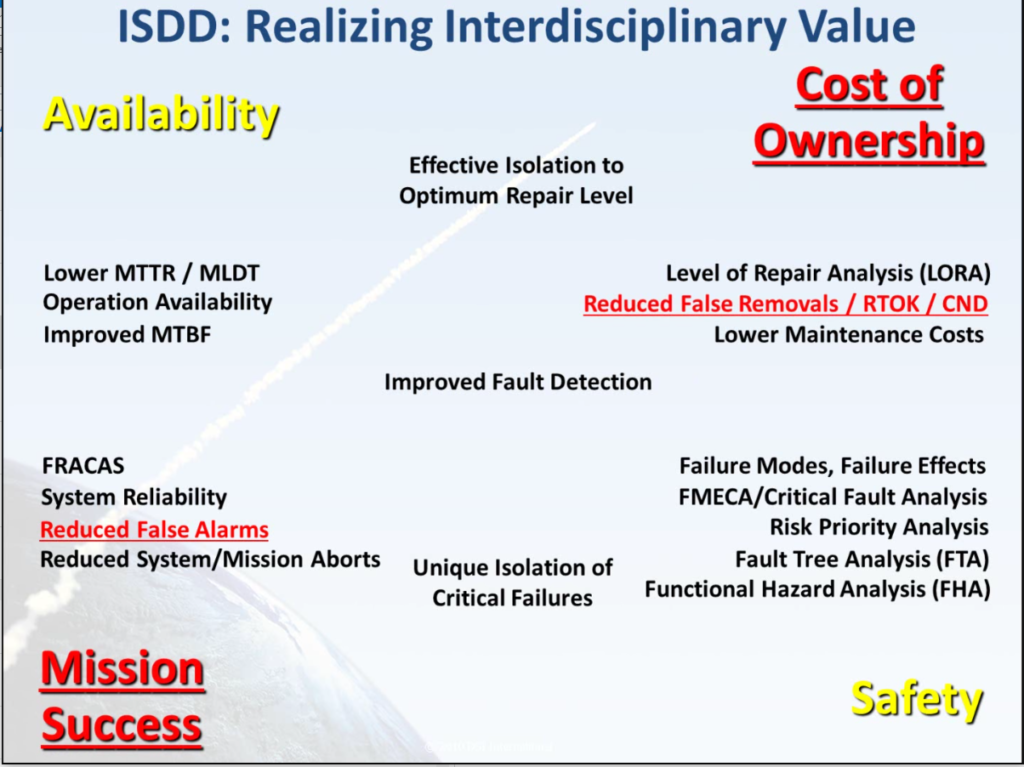

ISDD – Realizing Interdisciplinary Value

As systems continue to grow in both size and complexity, drilling down to find the root causes of failures is an increasingly difficult challenge. Many of the traditional methods for computing low-level reliability or maintainability statistics in complex designs, and for bringing that data up to the system level to demonstrate conformance with system requirements, are becoming increasingly costly and challenging.

Competing Among Partnering Design Disciplines?

While contributing lower-level designs may be developed to satisfy specific reliability and maintainability requirements, the complex integrated system should also be able to observe and manage failures expeditiously, effectively, affordably, and safely. The following discussion identifies areas within traditional design development processes that systemically contribute to a growing sustainment challenge, one that results from pursuing independent and often competing interdisciplinary assessment objectives.

Integrated Systems Diagnostics Design (ISDD): Creating Interdisciplinary Design “Trade Space”

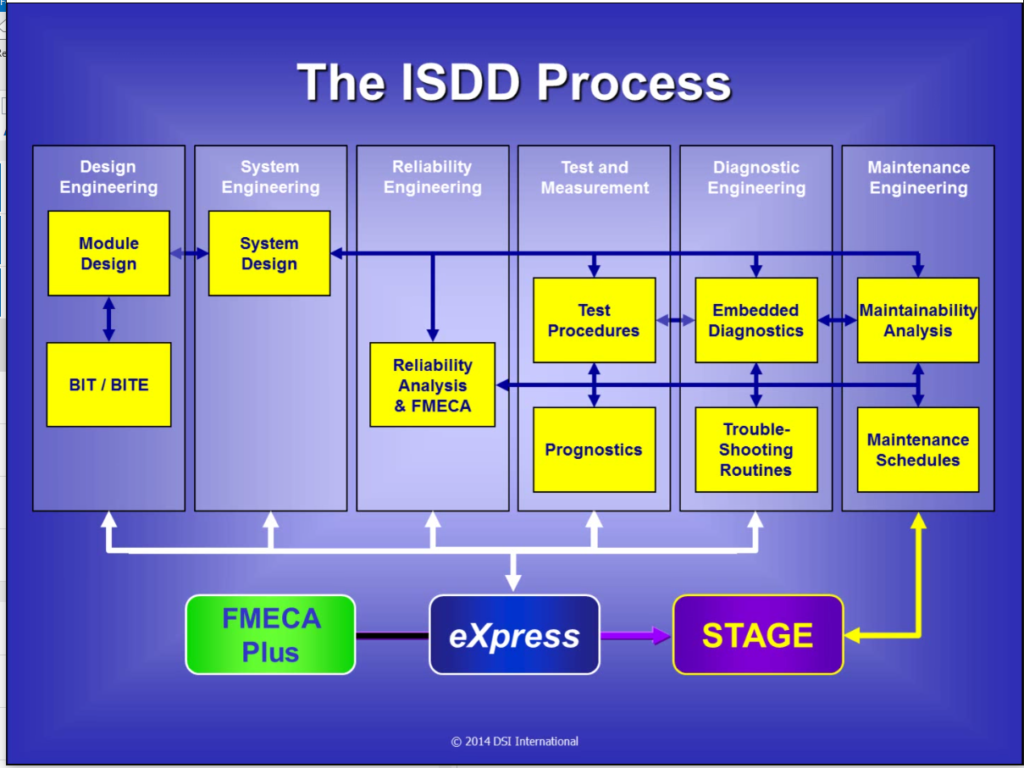

The extensive, corroborative nature of the Integrated Systems Diagnostics Design (ISDD) paradigm provides an interdisciplinary “trade space” that uncovers untapped Return on Investment (ROI) by balancing and reusing design assessment products and data artifacts more inclusively and seamlessly within the implemented maintenance solution.

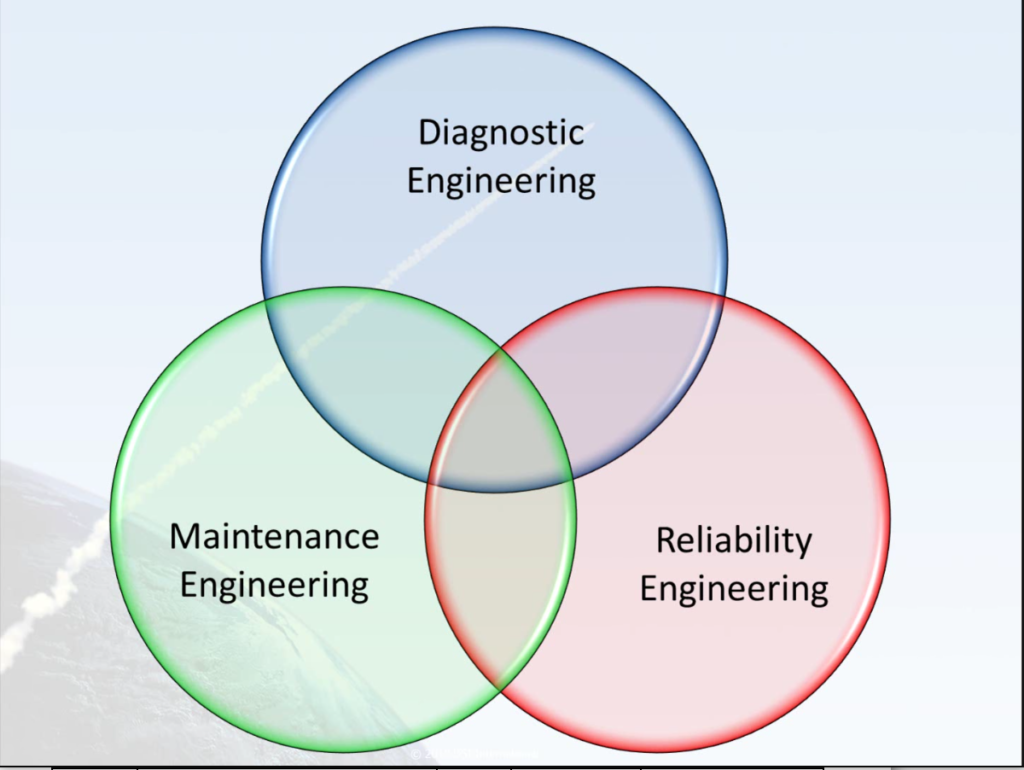

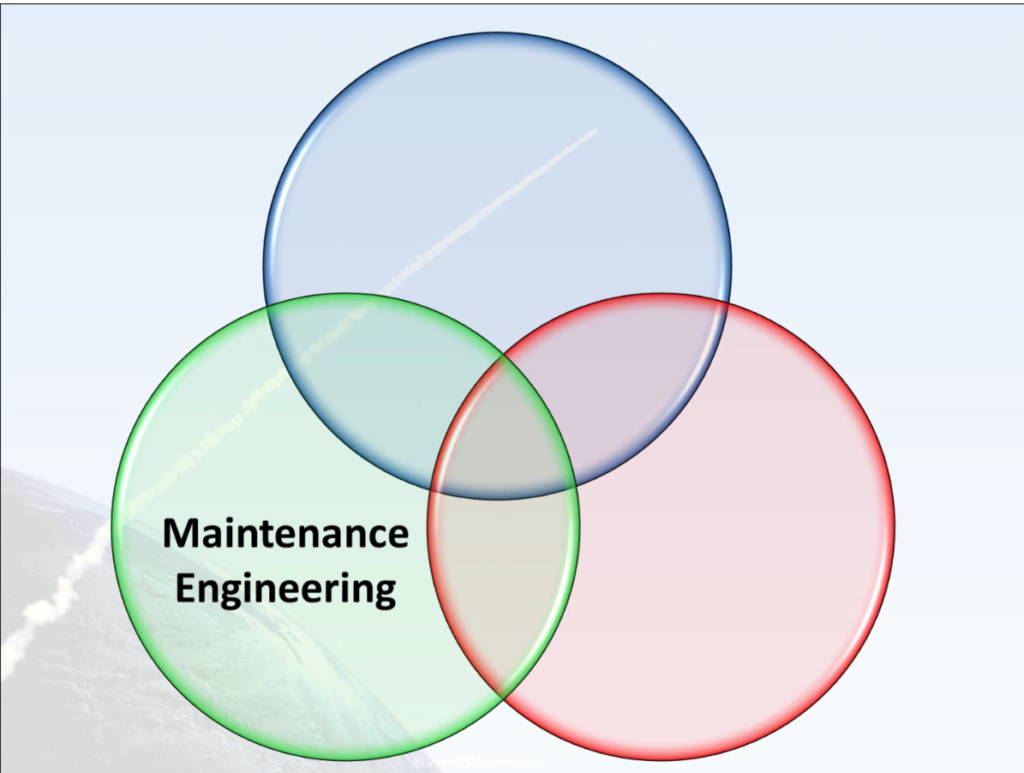

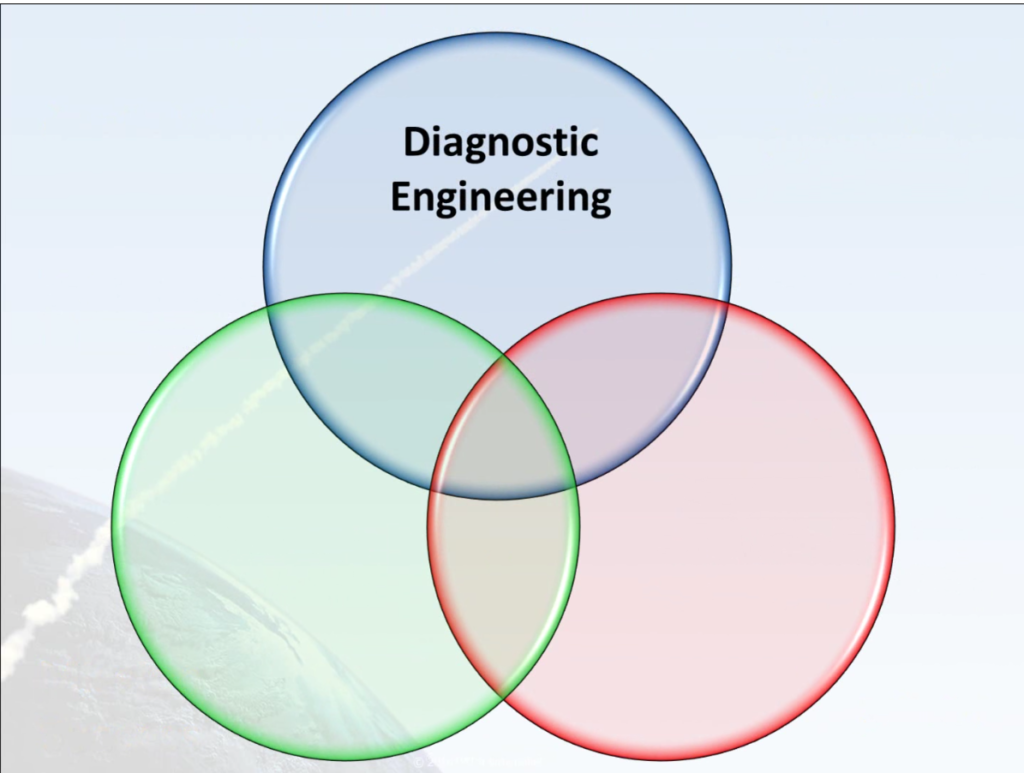

In the Venn diagram illustrated in this discussion, each traditional design discipline is represented as its own circle, and the general objectives of each discipline are noted. The independent assessment products can then be characterized to show how the sustainment goals of the fielded system are addressed and serviced.

Contribution from Reliability Engineering

Beginning with the circle that represents the reliability design discipline, we can see how each discipline pursues its own focused approach. In a large, complex integrated system, reliability engineering is an essential analysis process that must be performed concurrently with the other design disciplines and should be started early in product development. It may be performed extensively and consistently, or it may instead reflect the individual talents and preferences of the engineer performing it.

In complex systems, reliability analyses are critical for assessing a system’s ability to meet its design requirements. They typically involve many tools and techniques that often vary from organization to organization, so interoperability between heterogeneous reliability assessment tools and products remains a challenge. That challenge may arise between subsystem designs, in addressing the goals of the integrated system, or at the fielded product level (Availability, Cost of Ownership, Mission/Operational Success and Safety); however, the stated requirements are concerned with only one set of criteria.

Fundamentally, Reliability Engineering contributes analyses that best predict the expected reliable service life of each component used in the system. This discipline must also account for how and when any system component may fail, and how critical each failure is. In addition, it often evaluates the result or impact of each failure throughout the integrated system. While this is an oversimplification, it is the basis for computing many reliability products, such as component or design failure rates (FR), failure modes (FM), failure effects (FE), mean time between failures (MTBF), and criticality or severity of failure, as well as a number of higher-level reliability-based assessment products, including the Failure Mode, Effects and Criticality Analysis (FMECA), the Risk Priority Number (RPN) and the Fault Tree Analysis (FTA).
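As a rough illustration of how such products are computed, the sketch below rolls hypothetical component failure rates up to a system MTBF and ranks failure modes by RPN. It assumes a series system with constant (exponential) failure rates, so the rates simply add and MTBF is their reciprocal, and it uses the common RPN definition of Severity × Occurrence × Detection on 1 to 10 scales. The component names, rates and ratings are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class FailureMode:
    """One row of a hypothetical FMECA worksheet (illustrative fields only)."""
    component: str
    mode: str
    failure_rate: float  # failures per million operating hours (assumed unit)
    severity: int        # 1-10 rating, 10 = most severe
    occurrence: int      # 1-10 rating, 10 = most frequent
    detection: int       # 1-10 rating, 10 = hardest to detect

    @property
    def rpn(self) -> int:
        # Common FMEA definition: RPN = Severity x Occurrence x Detection
        return self.severity * self.occurrence * self.detection


def system_mtbf_hours(modes: list[FailureMode]) -> float:
    """MTBF of a series system, assuming constant failure rates.

    Under that assumption the failure rates of series elements add,
    and MTBF is the reciprocal of the total rate.
    """
    total_rate_per_hour = sum(m.failure_rate for m in modes) / 1e6
    return 1.0 / total_rate_per_hour


# Hypothetical worksheet data
worksheet = [
    FailureMode("power_supply", "output open", 20.0, 8, 4, 3),
    FailureMode("sensor", "drift high", 50.0, 5, 6, 7),
    FailureMode("controller", "lockup", 30.0, 9, 3, 2),
]

print(f"System MTBF: {system_mtbf_hours(worksheet):.0f} hours")
for m in sorted(worksheet, key=lambda m: m.rpn, reverse=True):
    print(f"{m.component:<14}{m.mode:<14}RPN = {m.rpn}")
```

With these made-up numbers the total rate is 100 failures per million hours, giving an MTBF of 10,000 hours, and the sensor drift mode ranks first (RPN 210) even though the controller lockup has the highest severity, showing that an RPN ranking can differ from a ranking by severity alone.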

Contribution from Maintenance Engineering

Maintenance Engineering contributes information on how the design is to be maintained, either when a failure occurs or before a failure is expected to occur, so that the product or system is able to perform properly when it is needed for operation.

Additionally, maintenance engineering must provide knowledge of the expected duration of a corrective maintenance action or repair activity, which may include the time expected to isolate, remove, procure, and replace the failed item. While this is an oversimplification, it is the basis for computing such products as mean time to isolate (MTTI), mean time to repair (MTTR), mean logistics delay time (MLDT) and Operational Availability (OA), to name a few of the more recognizable metrics.
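The arithmetic behind these maintainability metrics can be sketched as follows. The sketch assumes MTTI and MTTR are weighted by each item's failure rate (items that fail more often dominate the average), and it uses one common simplified form of operational availability, OA = MTBF / (MTBF + MTTR + MLDT); many programs instead define OA using mean time between maintenance and total mean downtime. All task times and rates are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RepairTask:
    """Hypothetical corrective-maintenance task for one replaceable item."""
    item: str
    failure_rate: float      # failures per million operating hours
    isolate_h: float         # time to isolate the fault to this item (hours)
    remove_replace_h: float  # time to remove and replace the item (hours)


def weighted_mean(tasks, hours_of):
    """Failure-rate-weighted mean of a per-task duration."""
    total_rate = sum(t.failure_rate for t in tasks)
    return sum(t.failure_rate * hours_of(t) for t in tasks) / total_rate


def mtti(tasks):
    return weighted_mean(tasks, lambda t: t.isolate_h)


def mttr(tasks):
    # Repair time here = isolation + remove/replace; procurement delay is
    # excluded because it is accounted for separately in MLDT.
    return weighted_mean(tasks, lambda t: t.isolate_h + t.remove_replace_h)


def operational_availability(mtbf_h, mttr_h, mldt_h):
    """Simplified OA: uptime divided by uptime plus downtime per failure."""
    return mtbf_h / (mtbf_h + mttr_h + mldt_h)


tasks = [
    RepairTask("power_supply", 20.0, isolate_h=0.5, remove_replace_h=1.5),
    RepairTask("sensor", 50.0, isolate_h=1.0, remove_replace_h=1.0),
    RepairTask("controller", 30.0, isolate_h=2.0, remove_replace_h=2.0),
]

print(f"MTTI = {mtti(tasks):.2f} h, MTTR = {mttr(tasks):.2f} h")
print(f"OA   = {operational_availability(10_000, mttr(tasks), mldt_h=24.0):.4f}")
```

With these made-up inputs MTTI is 1.2 hours and MTTR is 2.6 hours; because the assumed 24-hour logistics delay is much larger than the repair time, it dominates the downtime term in OA.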

Contribution from Diagnostics Engineering

Diagnostics Engineering is a process that is most effective when implemented at the very earliest stages of design development, where it can influence the design for sustainability. This is a tremendously valuable opportunity that has a significant impact on the effectiveness of any selected sustainment approach, including Design For Testability (DFT), On-Board Health Management, any Guided Troubleshooting paradigm, or evolving maintenance philosophies.

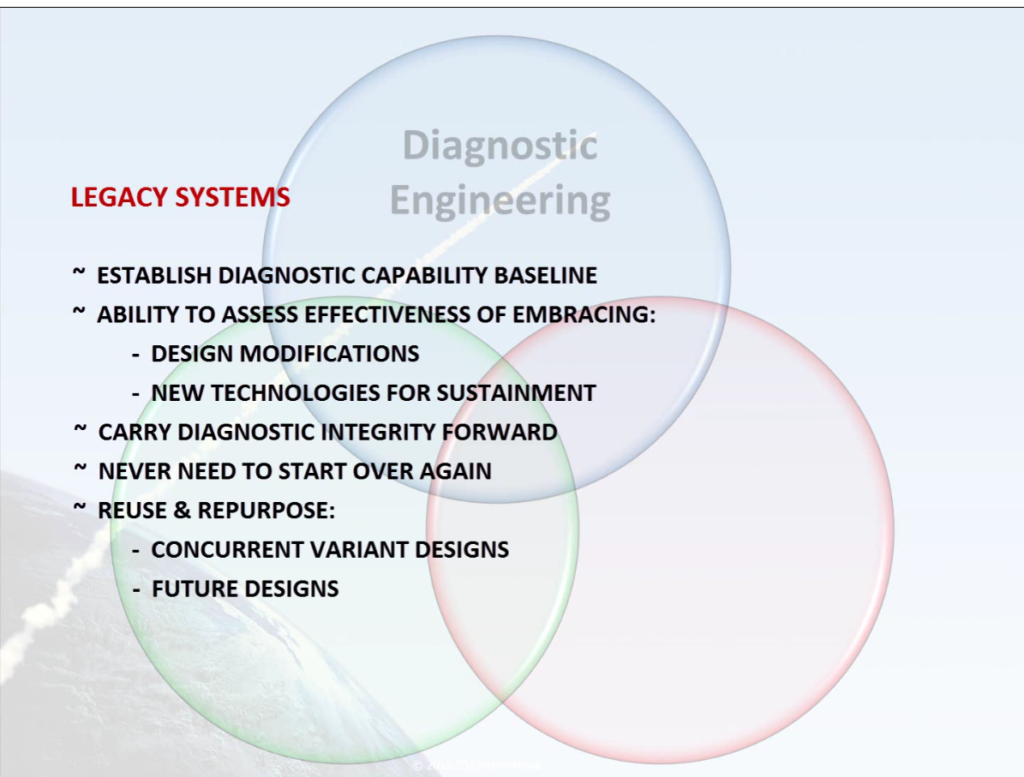

Even when that opportunity is not immediately available, diagnostic engineering can still enrich the ongoing diagnostic capability of complex legacy systems. Initially, this is accomplished by establishing the design’s diagnostic capability through a Fault Detection and Fault Isolation (FD/FI) baseline. That baseline immediately prepares the design to embrace future modifications that integrate new and evolving technological advancements in testing or sustainment paradigms. Eventually, the captured design allows the diagnostic assessment data to be reused on related future designs or repurposed for a wide variety of future cost-leveraging opportunities.
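One way to picture an FD/FI baseline is as a dependency matrix (D-matrix) that records which tests or sensors observe which failures. The sketch below is an illustration under stated assumptions, not a description of any particular diagnostic tool: it stores a hypothetical D-matrix as a mapping from each fault to the set of tests that detect it, computes a failure-rate-weighted fault detection fraction, and lists the faults that no test can see.

```python
# Hypothetical D-matrix: each fault maps to the set of tests that detect it.
d_matrix = {
    "power_supply": {"T1", "T2"},
    "sensor": {"T2", "T3"},
    "controller": {"T2", "T3"},
    "harness": set(),  # no existing test or sensor observes this fault
}

# Hypothetical failure rates (failures per million operating hours).
failure_rate = {"power_supply": 20.0, "sensor": 50.0, "controller": 30.0, "harness": 10.0}


def fault_detection(d_matrix, failure_rate):
    """Fraction of total failure rate covered by at least one test."""
    total = sum(failure_rate[f] for f in d_matrix)
    detected = sum(failure_rate[f] for f, tests in d_matrix.items() if tests)
    return detected / total


def undetected_faults(d_matrix):
    """Faults with no detecting test: candidates for new test points."""
    return sorted(f for f, tests in d_matrix.items() if not tests)


print(f"FD = {fault_detection(d_matrix, failure_rate):.1%}")
print("Undetected:", undetected_faults(d_matrix))
```

Because the baseline in this sketch is data rather than prose, it can be recomputed whenever a test point is added or the design is modified, which is the property that makes such a baseline reusable on later variants.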

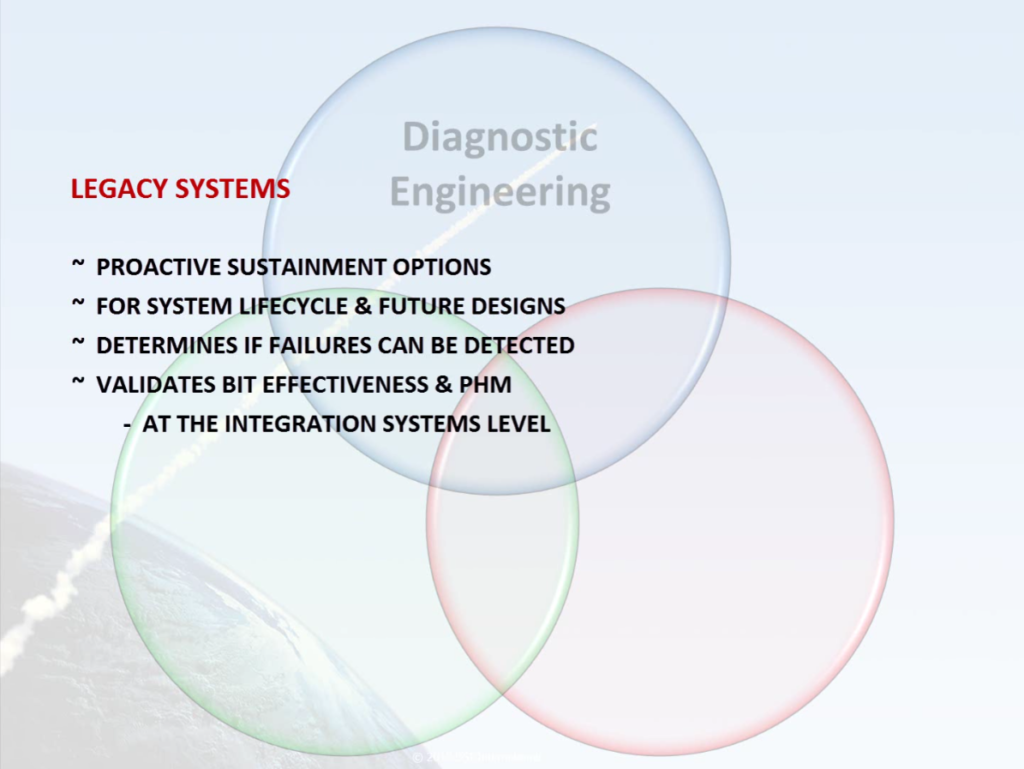

Diagnostic Engineering is a discipline that provides proactive sustainment options for future designs, paying dividends throughout the lifecycle of the fielded product or system. It has a tremendous impact on the utility of the data products produced by the investment in reliability and maintenance engineering.

This discipline determines whether failures can be detected or isolated, and then how best to observe or discover those failures so that the correct failure is indicted and remedied in a timely fashion. It offers unmatched insight into the validation of Built-In Test (BIT) effectiveness and of the integrity of any health management or Prognostics and Health Management (PHM) design at the integrated systems level.
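Whether a failure can be isolated, and not merely detected, depends on whether its pattern of failing tests is unique. The sketch below groups faults with identical test signatures into ambiguity (fault) groups and computes the failure-rate-weighted fraction of detected failures that isolate to a group of at most a given size. The D-matrix, fault names and rates are hypothetical, and measuring isolation over detected failures only is an assumed convention; specifications define these metrics in different ways.

```python
from collections import defaultdict

# Hypothetical D-matrix (fault -> tests that detect it) and failure rates.
d_matrix = {
    "power_supply": {"T1", "T2"},
    "sensor": {"T2", "T3"},
    "controller": {"T2", "T3"},
    "actuator": {"T3"},
}
failure_rate = {"power_supply": 20.0, "sensor": 50.0, "controller": 30.0, "actuator": 40.0}


def ambiguity_groups(d_matrix):
    """Group detected faults that produce exactly the same test signature."""
    groups = defaultdict(list)
    for fault, tests in d_matrix.items():
        if tests:  # an undetected fault cannot be isolated at all
            groups[frozenset(tests)].append(fault)
    return sorted(sorted(g) for g in groups.values())


def fault_isolation(d_matrix, failure_rate, max_group_size):
    """Share of detected failure rate isolating to <= max_group_size items."""
    groups = ambiguity_groups(d_matrix)
    detected = sum(failure_rate[f] for g in groups for f in g)
    isolated = sum(failure_rate[f] for g in groups if len(g) <= max_group_size for f in g)
    return isolated / detected


print(ambiguity_groups(d_matrix))
for k in (1, 2):
    print(f"FI to {k} item(s): {fault_isolation(d_matrix, failure_rate, k):.1%}")
```

In this example the sensor and controller share a signature, so neither can be indicted alone: about 42.9% of the detected failure rate isolates to a single item, and all of it isolates to two or fewer.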

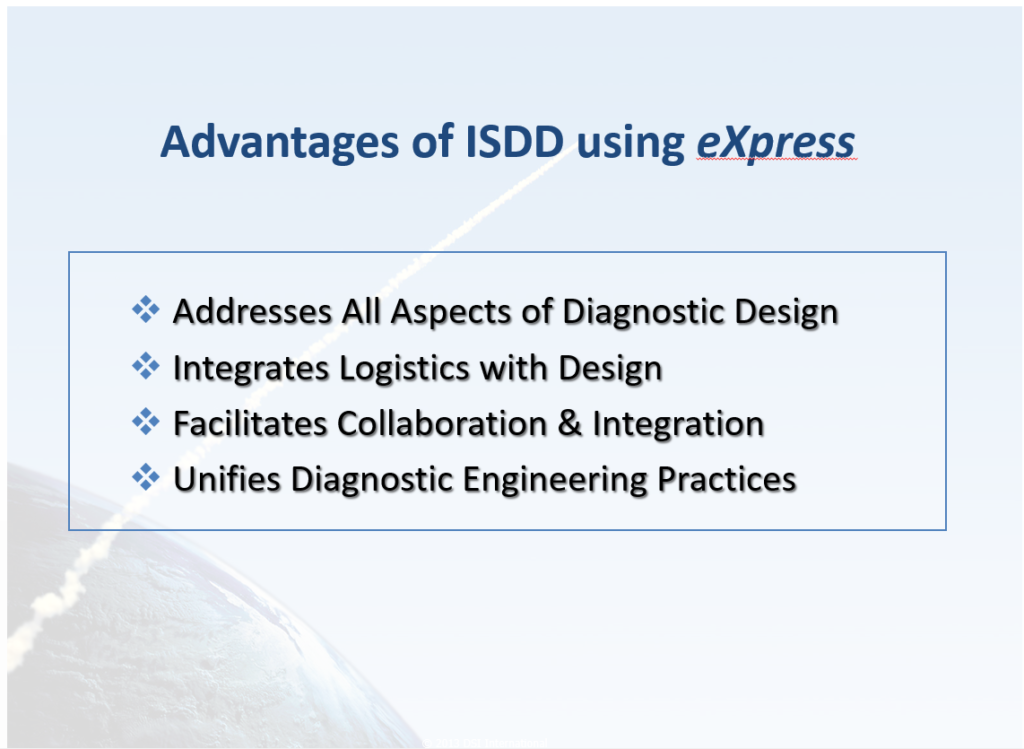

Diagnostic Engineering: Addresses All Aspects of Diagnostic Design; Integrates Logistics with Design; Facilitates Collaboration and Integration; and Unifies Diagnostic Engineering Practices

While legacy designs can be greatly enriched at any time by capturing the design knowledge in a form that can be leveraged for continued system lifecycle diagnostic benefit, that captured design knowledge can also provide a jump start for concurrent variant designs and new designs.

Ideally, ISDD is performed iteratively within the development process so that it can assess and influence the design from a diagnostic perspective, ensuring that the goals it shares with reliability and maintainability engineering are balanced and maximized continuously throughout the life-cycle of the fielded system.

Diagnostics Engineering – Being Proactive EARLY in Design Development

The early involvement of diagnostic engineering ensures that the design is influenced for sustainment, because the assessments from all of these design disciplines are no longer “marooned” to solely address requirements. Instead, these assessment products form a “balanced” knowledgebase “asset” that can be carried forward directly as an active participant in the implementation of the sustainment solution.

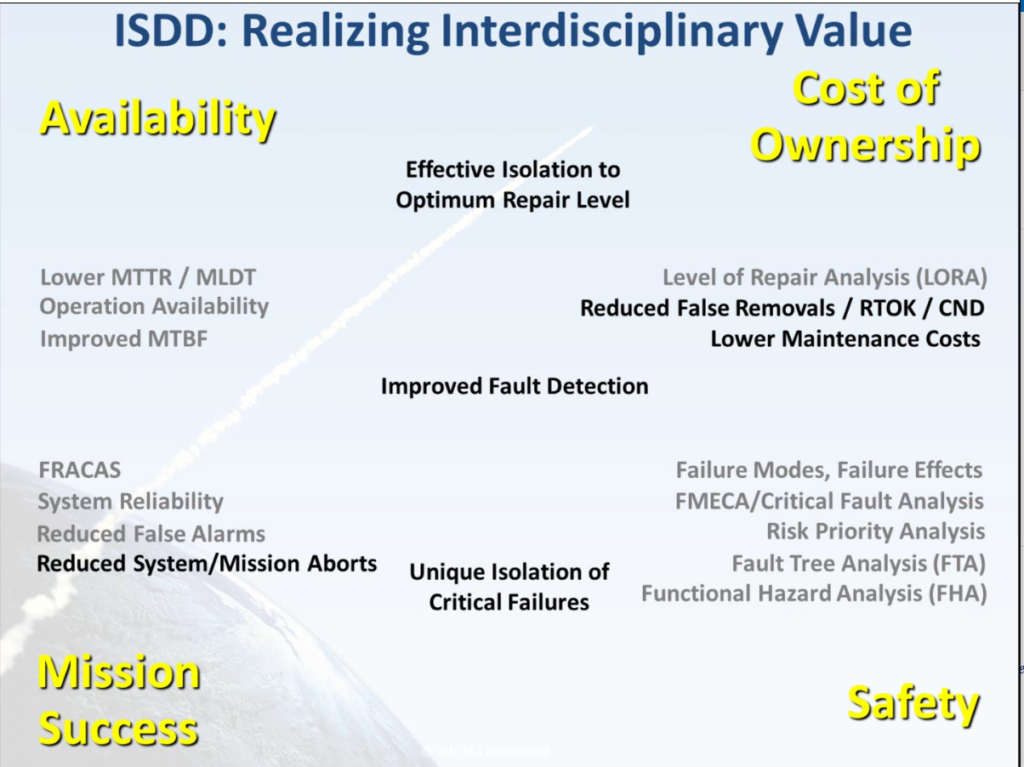

The timely performance of diagnostics engineering provides improved Fault Detection, reduced False Removals/Re-Test OK (RTOK)/Can Not Detect (CND), reduced False Alarms, reduced Systems/Mission Aborts, lower maintenance costs, effective isolation to the optimum repair level, and improved Operational Availability (OA). This use of diagnostic engineering makes it possible to uniquely isolate critical failures; in other words, to proactively discover where test points or sensors are unable to discern between the root causes of low-level component failures because of inherent integrated system design or health management constraints.
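The check described here, finding critical failures that the existing test points or sensors cannot tell apart from other failures, can be sketched against a hypothetical D-matrix (a mapping from each fault to the tests that detect it). The function below reports, for each failure flagged as critical, the other faults that share its test signature, or "undetected" if no test observes it; the example then shows how a hypothetical added test point (T4) would resolve the ambiguity. The fault names, tests and critical list are invented; in practice the critical list would come from the reliability analysis, for example the FMECA.

```python
from collections import defaultdict


def unresolved_critical(d_matrix, critical):
    """Map each critical fault that cannot be uniquely isolated to its peers.

    Peers are the other faults that produce the identical test signature.
    A critical fault that no test detects is reported as "undetected".
    """
    by_signature = defaultdict(set)
    for fault, tests in d_matrix.items():
        by_signature[frozenset(tests)].add(fault)
    report = {}
    for fault in critical:
        tests = frozenset(d_matrix[fault])
        if not tests:
            report[fault] = "undetected"  # no test or sensor observes it at all
            continue
        peers = by_signature[tests] - {fault}
        if peers:
            report[fault] = sorted(peers)
    return report


# Hypothetical D-matrix: fault -> tests that detect it.
d_matrix = {
    "power_supply": {"T1", "T2"},
    "sensor": {"T2", "T3"},
    "controller": {"T2", "T3"},
    "actuator": {"T3"},
}
critical = ["controller", "power_supply"]  # hypothetical, e.g. from an FMECA

print(unresolved_critical(d_matrix, critical))  # {'controller': ['sensor']}

# Hypothetical test-point enhancement: a new test T4 observes only the controller.
enhanced = {**d_matrix, "controller": d_matrix["controller"] | {"T4"}}
print(unresolved_critical(enhanced, critical))  # {}
```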

Realizing the Benefits of Seamlessly Enriching Corroboration Between Design Disciplines

When ISDD is performed within a corroborative design environment, systems, reliability, maintenance and diagnostics engineering activities share and leverage data in a synchronized manner, using identical, shared data artifacts that are repeatedly cross-checked for integrated design “fitness” in terms of consistency, completeness and omissions, all much earlier in the design development process.
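A cross-check of shared data artifacts for consistency, completeness and omissions can be as simple as comparing the item lists and values that each discipline holds. The sketch below assumes three hypothetical artifacts: the FMECA failure rates, the failure rates carried in the diagnostic model, and the set of items covered by a corrective maintenance task. Real artifacts are far richer; this only illustrates the kind of automated comparison that a shared data environment makes possible.

```python
import math


def cross_check(fmeca_rates, diagnostic_rates, maintenance_items, rel_tol=1e-6):
    """Return findings where three hypothetical artifacts disagree."""
    findings = []
    for item, rate in fmeca_rates.items():
        if item not in diagnostic_rates:
            findings.append(f"omission: {item} is missing from the diagnostic model")
        elif not math.isclose(rate, diagnostic_rates[item], rel_tol=rel_tol):
            findings.append(
                f"inconsistency: {item} failure rate {rate} (FMECA) "
                f"vs {diagnostic_rates[item]} (diagnostic model)"
            )
        if item not in maintenance_items:
            findings.append(f"omission: no maintenance task covers {item}")
    # Items modeled for diagnostics but absent from the reliability analysis
    for item in sorted(diagnostic_rates.keys() - fmeca_rates.keys()):
        findings.append(f"completeness: {item} is in the diagnostic model but not the FMECA")
    return findings


fmeca_rates = {"power_supply": 20.0, "sensor": 50.0, "controller": 30.0}
diagnostic_rates = {"power_supply": 20.0, "sensor": 45.0, "actuator": 40.0}
maintenance_items = {"power_supply", "sensor"}

for finding in cross_check(fmeca_rates, diagnostic_rates, maintenance_items):
    print(finding)
```

Run on these invented inputs, the check reports a failure-rate mismatch for the sensor, two omissions for the controller, and an actuator that appears only in the diagnostic model.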

Metrics owned by each independent discipline have traditionally served to score or assess how well each discipline meets its own requirements. Under this traditional approach, it is difficult to recognize how these independently owned requirements often work at cross-purposes, competing against the metrics and requirements served by the companion disciplines.

An Example of Competing Objectives

Suppose there is an objective to reduce false alarms. Mission success may increase as a result, but false removals may also increase if the design was not optimized for fault detection, raising the cost of ownership by requiring excessive spares to replace non-failed components that still have substantial remaining useful life. Because diagnostic engineering analyses provide knowledge of fault group constituency, we would know when the limits of diagnostic capability are reached and an entire fault group, or set of suspected failed items, must be removed to remediate the failure. However, when investment in diagnostic engineering is minimized, such replacements may be incorrect: they may not fix the failure, or may even “mask” non-detectable failures, thereby increasing the loss of system availability. Many of these valuable assessments are not possible without the inclusion of high-end diagnostic engineering.
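The cost of removing a whole fault group can be estimated from its constituency. The sketch below assumes one failure at a time, constant failure rates, and that the entire ambiguity group is replaced whenever any of its members fails; under those assumptions each corrective action on a group of n items also pulls n - 1 non-failed items. The groups, rates and operating hours are hypothetical.

```python
def false_removals_per_action(groups, failure_rate):
    """Expected non-failed items removed per corrective action.

    Assumes one failure at a time and replacement of the whole ambiguity
    group, so a failure in a group of n items also removes n - 1 good items.
    """
    total = sum(failure_rate[f] for g in groups for f in g)
    return sum(failure_rate[f] * (len(g) - 1) for g in groups for f in g) / total


def false_removals_per_year(groups, failure_rate, operating_hours_per_year):
    """Scale by expected failures per year (rates in failures per 1e6 hours)."""
    total = sum(failure_rate[f] for g in groups for f in g)
    failures_per_year = total * operating_hours_per_year / 1e6
    return failures_per_year * false_removals_per_action(groups, failure_rate)


failure_rate = {"power_supply": 20.0, "sensor": 50.0, "controller": 30.0, "actuator": 40.0}
before = [["power_supply"], ["controller", "sensor"], ["actuator"]]
# Hypothetical: a new test point splits the sensor/controller group.
after = [["power_supply"], ["controller"], ["sensor"], ["actuator"]]

for label, groups in (("before", before), ("after", after)):
    rate = false_removals_per_year(groups, failure_rate, 2_000)
    print(f"{label}: {rate:.3f} good items removed per year")
```

In this toy model, splitting the sensor/controller group eliminates the predicted false removals; it ignores masking, RTOK and CND effects, which a real assessment would also need to consider.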

ISDD Provides a Gateway to Incorporating Lessons Learned

Learning from fielded experience is useful, but it is a reactive measure. As we move toward more complex or critical systems, diagnostic engineering provides proactive design influence that keeps returning benefits, especially when practiced within Integrated Systems Diagnostics Design (ISDD) as a corroborative development process.

This enables many traditional and commonly pursued value-added design development and sustainment benefits to be optimized nearly inadvertently:

- Requirements derivation

- Requirements flow-down

- Design development

- Test point enhancement

- Design and Diagnostic Optimization

- Prognostic and On-Board Reasoner development

- On-Board to Off-Board Diagnostic Savvy

- Integrates IVHM with Maintenance Environment

- Embedded Systems Integration

- Integrates Logistics with Design and Field Expertise

- Facilitates collaboration and integration

- Unified Diagnostic Engineering practices

- Transferrable to Successive and Concurrent Variant Systems

ISDD ensures an approach that collectively serves the interests of each independently owned design discipline, across disciplines and organizations, throughout the design development process, balanced against the overall goals of the integrated system design. Through this nearly inadvertent “corroborative” data-sharing and leveraging environment, we slice through the “competitive” nature of each independently owned discipline and discover new value that gives every follow-on development a head start. This is true for concurrent variant designs as well as for ensuing new designs and evolving sustainment-supporting technologies.

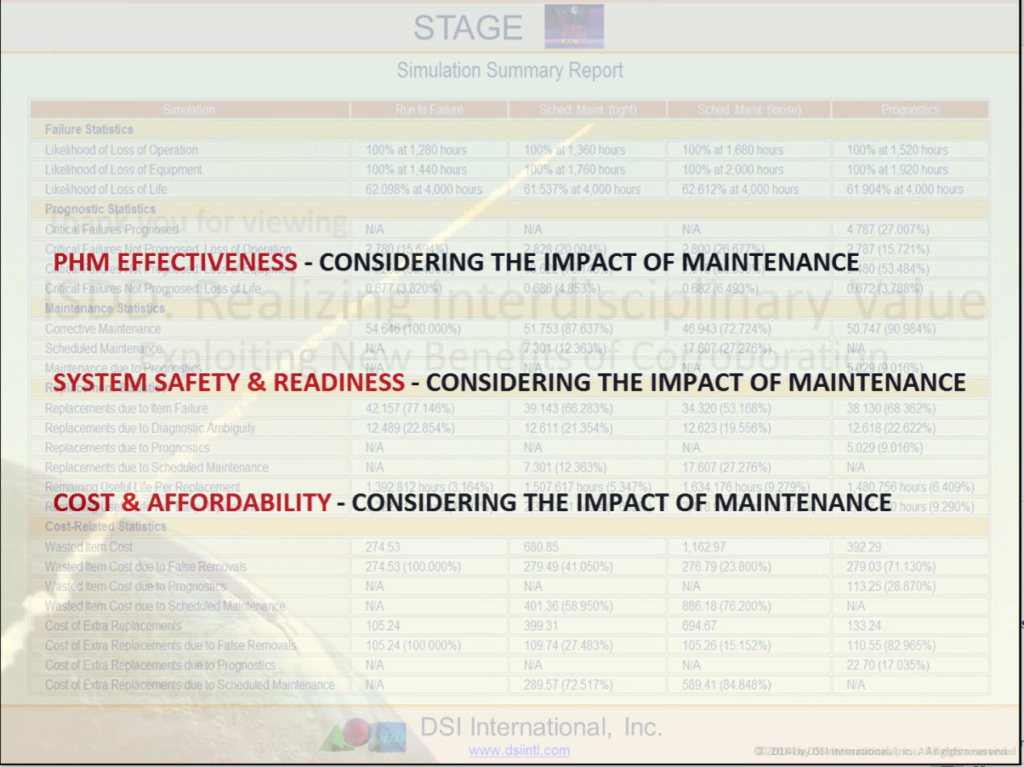

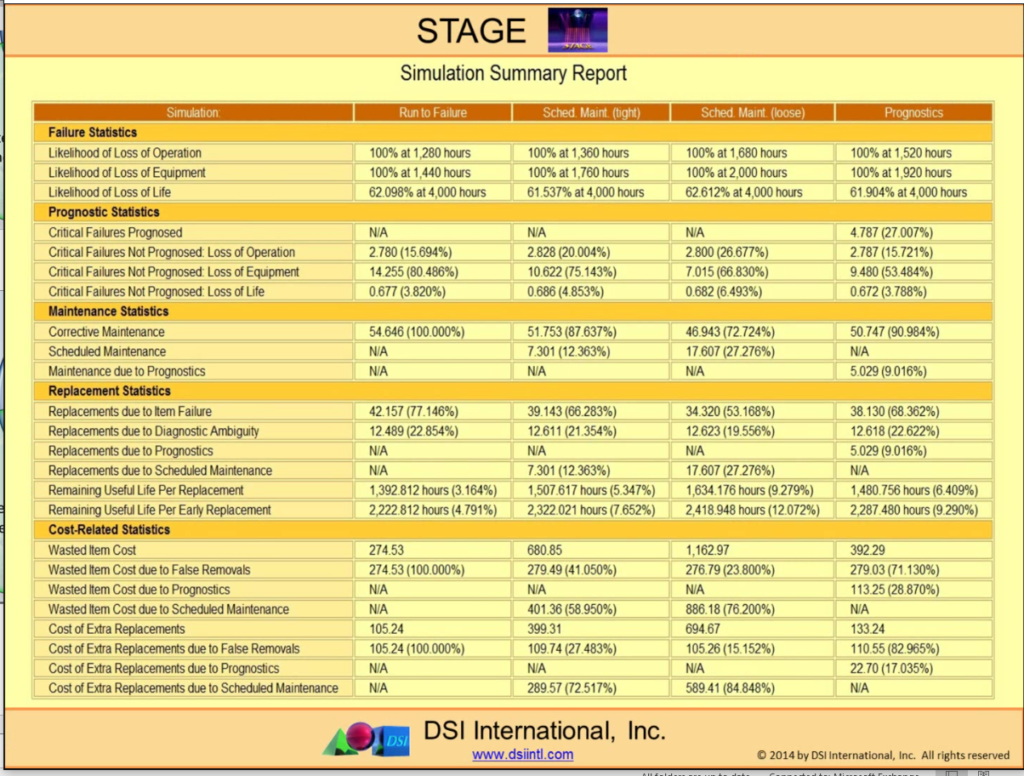

“STAGE” Operational Simulation Results in accordance with the Diagnostic Effectiveness Realized by the Fielded System per “Traded” Maintenance Paradigms